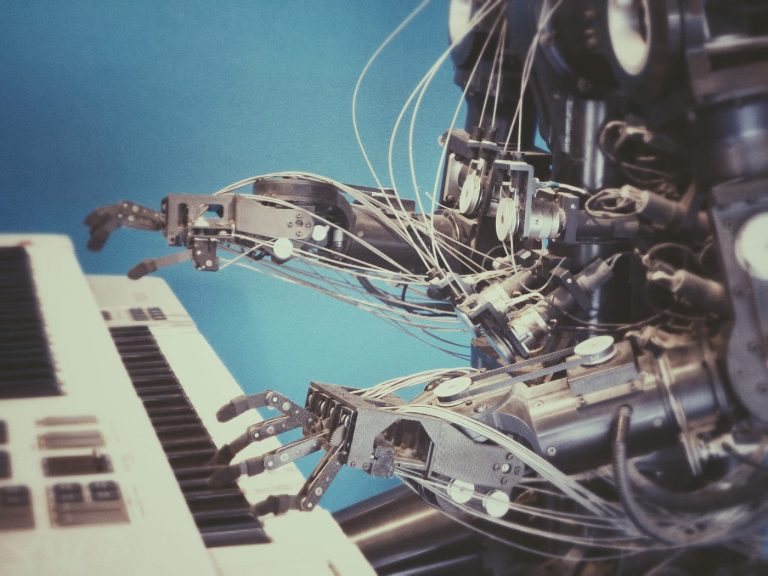

In recent years, machines have become remarkably adept at understanding complex human expressions, from deciphering languages to interpreting music. This intersection of artificial intelligence (AI) and the arts raises the question: How do machines understand music and language?

While humans rely on intuition, emotions, and years of cultural context, machines use data, algorithms, and pattern recognition to make sense of these rich, nuanced forms of communication. Understanding how machines process music and language reveals a fascinating blend of technology, science, and art.

In this article, we’ll dive into the key processes that enable machines to understand and create music and language, the role of AI, and what this means for creative industries and everyday users.

The Intersection of Music and Language

Before diving into the mechanics of how machines understand these mediums, it’s important to recognize the connection between music and language. Both are systems of communication built on patterns and structure, and both use rhythm, tone, and pitch to convey meaning and emotion.

Linguists and music theorists have long observed similarities between the two, so it is not surprising that machines can use similar techniques to decode and generate both. Modern AI models built for natural language processing (NLP) and for music generation can analyze texts and melodies, find patterns, and predict the next word or note from what came before.
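To make the parallel concrete, here is a minimal sketch that assumes the simplest possible "model": count which token follows which (a bigram model) and predict the most frequent follower. The same two functions work unchanged on words and on note names. The tiny training sequences are made up for illustration; real systems use neural networks trained on far larger corpora.

```python
from collections import Counter, defaultdict

def train_bigrams(sequences):
    """Count how often each token is followed by each other token."""
    followers = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            followers[current][nxt] += 1
    return followers

def predict_next(followers, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    if token not in followers:
        return None
    return followers[token].most_common(1)[0][0]

# Hypothetical toy corpora: sentences split into words, melodies as note names.
sentences = [
    "the cat sat on the mat".split(),
    "the cat chased the dog".split(),
]
melodies = [
    ["C4", "E4", "G4", "E4", "C4"],
    ["C4", "E4", "G4", "C5"],
]

word_model = train_bigrams(sentences)
note_model = train_bigrams(melodies)

print(predict_next(word_model, "the"))  # cat ("cat" follows "the" twice)
print(predict_next(note_model, "E4"))   # G4 ("G4" follows "E4" twice)
```

Nothing in the code knows whether it is handling language or music; it only sees sequences of symbols, which is the core reason the same family of techniques transfers between the two.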

How Machines Learn to Understand Language

When it comes to language, machines rely on NLP, the branch of AI concerned with analyzing, understanding, and generating human language. Here’s how it works:

- Tokenization: Machines break text into smaller pieces called tokens, which may be whole words, parts of words, or punctuation marks. This lets the machine process each component of a sentence individually.

- Context Understanding: Using neural networks, machines learn how a word’s meaning depends on the words around it. This is essential because many words have multiple meanings: "bank" means something different in "river bank" and "bank account."

- Pattern Recognition: AI models are trained on vast amounts of text data, learning to recognize patterns in language use, grammar, and syntax. Over time, the machine learns to predict the next word in a sentence or to complete sentences based on patterns it has learned.

- Semantic Analysis: Understanding language isn’t just about grammar and vocabulary; it also involves meaning. Semantic analysis allows machines to determine the sentiment or intent behind a sentence, helping them respond more naturally.
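A toy version of two of these steps, tokenization and a very crude form of sentiment analysis, fits in a few lines. This is a minimal sketch assuming a regular-expression tokenizer and a small hand-written sentiment lexicon (`SENTIMENT_LEXICON` and `NEGATORS` are hypothetical); production systems learn subword tokenizers and context-aware models instead. The one-word look-back for negation is a stand-in for the much richer context handling that neural networks provide.

```python
import re

# Hypothetical sentiment lexicon: word -> polarity score.
SENTIMENT_LEXICON = {
    "love": 2, "great": 2, "good": 1,
    "bad": -1, "boring": -2, "hate": -2,
}

# Hypothetical set of words that flip the polarity of the next word.
NEGATORS = {"not", "never", "no"}

def tokenize(text):
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Sum lexicon scores, flipping the sign of a word preceded by a negator."""
    tokens = tokenize(text)
    score = 0
    for i, tok in enumerate(tokens):
        value = SENTIMENT_LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value  # minimal context: "not bad" counts as positive
        score += value
    return score

print(tokenize("I love this song, but the ending is boring."))
print(sentiment("I love this song, but the ending is boring."))  # 0 (2 + -2)
print(sentiment("This melody is not bad."))                      # 1 (negated -1)
```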

The culmination of these techniques allows machines to understand language and even converse with humans in a way that feels natural.

Example of NLP in Action

One notable example of NLP in action is OpenAI’s GPT-3, a language model that generates human-like text from a given prompt. Trained on vast amounts of text, it can produce everything from news articles to poetry, and it was one of the most advanced language models when it was released in 2020.
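GPT-style models generate text one token at a time: at each step the network assigns a score (a logit) to every token in its vocabulary, the scores are converted into probabilities with a softmax, and one token is chosen and appended before the process repeats. The sketch below shows only that final sampling step, assuming made-up scores for a four-word vocabulary. The `temperature` parameter is a common knob in text-generation tools for trading predictability against variety; the scores and vocabulary here are hypothetical, not taken from GPT-3.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(vocab, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The night sky was full of".
vocab = ["stars", "clouds", "music", "spreadsheets"]
logits = [4.0, 2.5, 1.0, -2.0]

for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(logits, t)])

rng = random.Random(0)  # fixed seed so the run is reproducible
print(sample_next(vocab, logits, temperature=1.0, rng=rng))
```

At a low temperature the top-scoring word ("stars") dominates; raising the temperature flattens the distribution, so unlikely words such as "spreadsheets" are sampled more often.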

How Machines Understand Music

The process of teaching machines to understand music is similar in spirit to NLP but involves different datasets and training methods. Music generation models rely on algorithms that recognize patterns, melodies, and structures in music. Here’s how machines interpret music:

- Melodic Analysis: Machines are trained on large datasets of music, enabling them to identify melodies, chord progressions, and harmonies. By analyzing thousands of songs, machines learn common musical patterns.

- Rhythm and Tempo Recognition: Machines can break down a song into beats, identifying rhythmic structures. This helps AI predict where the next note or beat should occur, just as it predicts words in a sentence.

- Genre Identification: Just as humans can categorize music by genre, machines are taught to recognize different styles of music by analyzing their characteristics. This allows AI to generate new compositions in specific genres.

- Emotion Detection: Music often conveys emotions, and AI can be trained to recognize and replicate these emotional cues by analyzing the tone, tempo, and key of a composition.
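Several of these analyses start from simple, measurable features. The sketch below assumes a melody is already available as (MIDI pitch, onset time in seconds) pairs, as it would be after reading a MIDI file, and computes three of them: the melodic intervals between successive notes, a tempo estimate from the median gap between onsets, and a rough major/minor guess. The mood rule at the end is a deliberately crude, hypothetical heuristic; emotion-detection systems typically learn such mappings from labeled data rather than hard-coding them.

```python
from statistics import median

# Hypothetical melody: (MIDI pitch, onset time in seconds). 60 = middle C.
melody = [(60, 0.0), (64, 0.5), (67, 1.0), (64, 1.5), (60, 2.0), (62, 2.5), (64, 3.0)]

def intervals(notes):
    """Melodic analysis: semitone steps between consecutive pitches."""
    pitches = [p for p, _ in notes]
    return [b - a for a, b in zip(pitches, pitches[1:])]

def estimate_bpm(notes):
    """Rhythm analysis: assume each onset falls on a beat and convert the median gap to BPM."""
    onsets = [t for _, t in notes]
    gaps = [b - a for a, b in zip(onsets, onsets[1:])]
    return 60.0 / median(gaps)

def guess_mode(notes):
    """Rough mode guess: treat the first note as the tonic and compare how often
    the major third (+4 semitones) and minor third (+3) above it occur."""
    tonic = notes[0][0] % 12
    pitch_classes = [p % 12 for p, _ in notes]
    major = pitch_classes.count((tonic + 4) % 12)
    minor = pitch_classes.count((tonic + 3) % 12)
    return "major" if major >= minor else "minor"

def guess_mood(bpm, mode):
    """Hypothetical rule of thumb, not a validated model."""
    if mode == "major" and bpm >= 110:
        return "bright"
    if mode == "minor" and bpm < 90:
        return "somber"
    return "neutral"

bpm = estimate_bpm(melody)
mode = guess_mode(melody)
print(intervals(melody))                 # [4, 3, -3, -4, 2, 2]
print(bpm, mode, guess_mood(bpm, mode))  # 120.0 major bright
```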

AI and Music Generation: A Perfect Match

Artificial intelligence has opened new doors for music creation. By analyzing existing compositions, machines can create original music, offering a way for people without musical backgrounds to compose songs. AI tools like Amper Music and AIVA are capable of producing music based on a user’s preferences, with little to no manual input required.

For those looking to compose music from text, AI technology now bridges the gap between language and music. For instance, a text-to-music AI generator can take a poem or other written text and turn it into a melody. This merging of text analysis and music generation shows how AI can bring different art forms together.
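Real text-to-music systems generally rely on trained models whose internals vary by product. To illustrate only the basic idea of turning words into notes, here is a deliberately simple, hypothetical rule-based mapping: each word becomes one note of a C-major scale chosen from its letters, and words with more vowel groups (a rough proxy for syllables) get longer durations. It is a sketch under those stated assumptions, not how any particular generator works.

```python
import re

# Hypothetical choice of scale: C major, C4 to B4, as MIDI pitch numbers.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]

def text_to_melody(text, scale=C_MAJOR):
    """Map each word to a (MIDI pitch, duration in beats) pair using toy rules:
    - pitch: sum of letter positions (a=1 ... z=26), wrapped onto the scale
    - duration: one beat per group of vowels, a rough stand-in for syllables
    """
    melody = []
    for word in re.findall(r"[a-z']+", text.lower()):
        word = word.replace("'", "")  # "summer's" -> "summers"
        if not word:
            continue
        letter_sum = sum(ord(ch) - ord("a") + 1 for ch in word)
        pitch = scale[letter_sum % len(scale)]
        syllables = len(re.findall(r"[aeiouy]+", word)) or 1
        melody.append((pitch, syllables))
    return melody

print(text_to_melody("Shall I compare thee to a summer's day?"))
```

Because the rules are fixed, the same text always yields the same melody; a learned model would instead choose notes based on patterns in its training data.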

Real-World Example of AI in Music

OpenAI’s MuseNet is a deep neural network that can generate 4-minute musical compositions with 10 different instruments in a wide variety of styles, from classical to jazz. Trained on hundreds of thousands of MIDI files, MuseNet can produce pieces that sound convincingly like human-composed work.
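MuseNet treats music much like text: notes are written out as a sequence of discrete tokens, and the model learns to predict the next token. The sketch below shows one way such an encoding can work. The token format (`instrument:pitch` for a note starting, `wait:N` for ticks passing) is a simplified, hypothetical scheme loosely modeled on event-based MIDI encodings, not MuseNet’s actual vocabulary, and it omits note durations and volume for brevity.

```python
# Hypothetical note events: (start_tick, instrument, MIDI pitch).
events = [
    (0, "piano", 60),
    (0, "violin", 67),
    (12, "piano", 64),
    (24, "piano", 67),
    (24, "violin", 72),
]

def encode(events):
    """Flatten simultaneous and sequential notes into one token sequence.
    Notes that start together are listed back to back; a `wait:N` token
    records the ticks that pass before the next group of notes."""
    tokens = []
    current_time = 0
    for start, instrument, pitch in sorted(events):
        if start > current_time:
            tokens.append(f"wait:{start - current_time}")
            current_time = start
        tokens.append(f"{instrument}:{pitch}")
    return tokens

def decode(tokens):
    """Invert `encode`, recovering (start_tick, instrument, pitch) events."""
    decoded, current_time = [], 0
    for tok in tokens:
        kind, value = tok.split(":")
        if kind == "wait":
            current_time += int(value)
        else:
            decoded.append((current_time, kind, int(value)))
    return decoded

tokens = encode(events)
print(tokens)  # ['piano:60', 'violin:67', 'wait:12', 'piano:64', 'wait:12', 'piano:67', 'violin:72']
```

Once music is a flat list of tokens like this, the same next-token prediction machinery used for language models can be trained on it directly.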

Challenges in Teaching Machines to Understand Music and Language

Despite impressive progress, there are still limitations in AI’s understanding of music and language. While machines can recognize patterns and replicate them, they lack the deep emotional understanding that humans bring to art. For instance, while an AI model can predict what note should come next in a song, it may not grasp the emotional nuance or creative intent behind that choice.

Additionally, teaching machines to understand abstract concepts, such as the feeling of nostalgia evoked by a certain piece of music or the subtext in a poem, remains a challenge. However, as AI continues to evolve, researchers are making gradual progress on these limitations.

Conclusion

The ability of machines to understand music and language represents a significant achievement in AI research. Using complex algorithms and neural networks, AI can not only analyze but also generate music and text that can feel remarkably human.

For musicians, poets, and content creators, AI opens up exciting new possibilities. Whether it’s creating original music with AI-driven tools or using NLP models to generate text, AI is taking on a growing role in music and language. As technology continues to advance, the boundary between human creativity and machine intelligence is likely to keep blurring, creating a fascinating space for innovation.